- Weaviate Newsletter

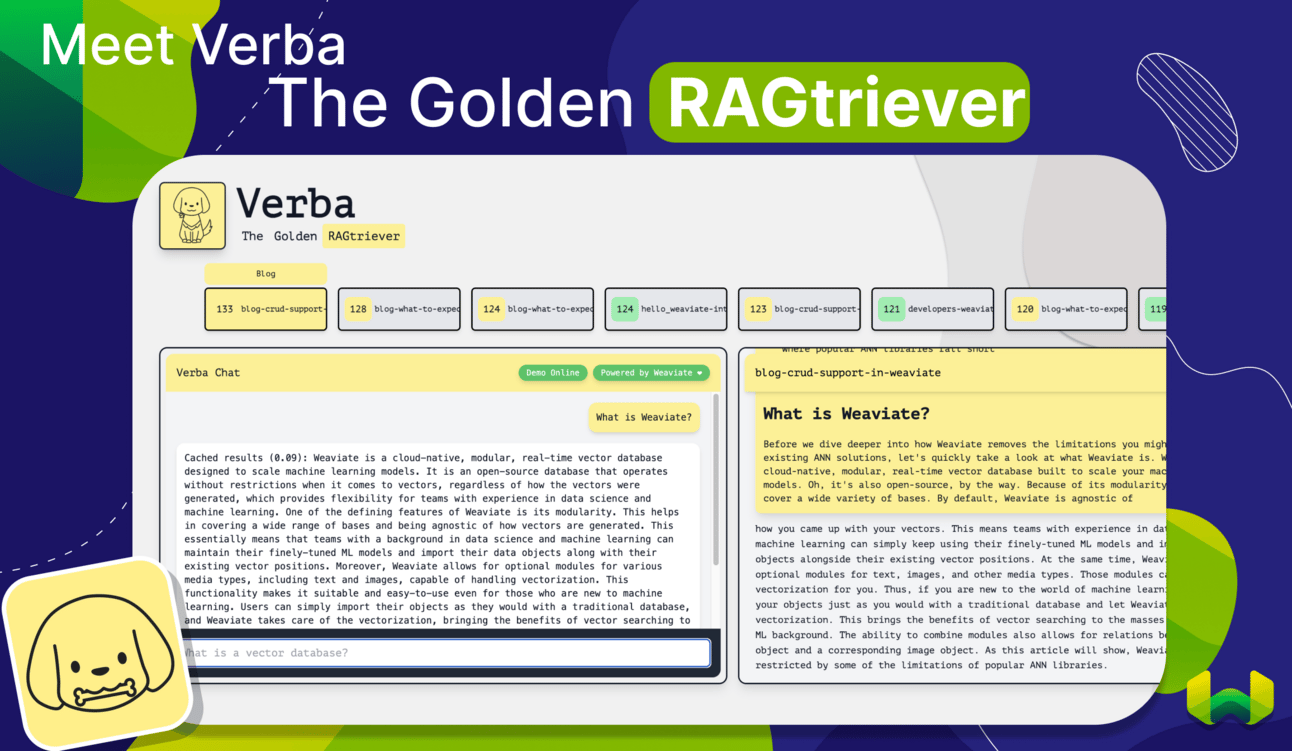

Provide LLMs with your internal knowledge base to generate specified outputs!

Query Weaviate without learning any syntax via our fine-tuned Gorilla LLM

With Verba, you can build your own knowledge extraction pipeline so that large language models ground their outputs in your internal knowledge base. We are also excited to introduce our new fine-tuned Gorilla LLM, which helps you generate valid GraphQL queries for Weaviate without knowing the syntax.

Let’s dive into the details!

Verba

Welcome the newest Weaviate member, Verba, the Golden RAGtriever! Verba is an open-source, full-stack retrieval augmented generation (RAG) application, hence the name. RAG combines information retrieval with text generation so that large language models (LLMs) can work with custom data. Verba is equipped with Weaviate’s documentation, blog posts, and YouTube videos as the data source.
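
To make the retrieve-then-generate idea concrete, here is a minimal sketch of the RAG pattern in plain Python. It is not Verba's implementation: the `DOCS` list, the bag-of-words `embed` function, and the prompt wording are all assumptions made for illustration. A real setup would use a vector database such as Weaviate for retrieval and send the final prompt to an LLM.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory knowledge base (a real system would query a vector database).
DOCS = [
    "Generative search sends retrieved objects to a language model.",
    "FastAPI is a Python web framework.",
    "Weaviate is a vector database.",
]

def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, docs, k=2):
    """Retrieval step: return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def build_prompt(query, context):
    """Generation step input: combine retrieved context with the question."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{joined}\n\n"
        f"Question: {query}"
    )

question = "How does generative search work?"
top_docs = retrieve(question, DOCS, k=1)
prompt = build_prompt(question, top_docs)
print(prompt)  # this prompt would then be sent to an LLM
```

Keeping the retrieved documents alongside the prompt is also what makes it possible to show sources next to the generated answer.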

Here is an example of how Verba could help you build with Weaviate: say you’d like to learn how to use the generative search module. Verba will respond with an answer and code snippets rather than only pointing to the documentation or blog post that covers generative search.

The cherry on top is that Verba links its sources, which is excellent when you want to dive a bit deeper.

By default, Verba uses Weaviate data; however, you have the flexibility to swap it for your own data. The directions on how to do this are in the GitHub repository.

The current stack uses Weaviate, FastAPI, and a React frontend. Vectorization is currently done with OpenAI; however, we are working on adding open-source alternatives. This is an ongoing project, so stay tuned for more updates!

Check out these resources:

Test out Verba

Weaviate Gorilla

Gorilla enables large language models (LLMs) to use various APIs. We have begun exploring the Gorilla system for Weaviate!

Gorilla describes LLMs fine-tuned to write requests for a particular set of APIs. In this work, we took the 46 GraphQL Search APIs in Weaviate’s documentation and tasked GPT-4 with writing a query for each of them against each of 50 synthetic schemas. This resulted in a total of 2,300 examples. We then fine-tuned the LLaMA 7B model on this dataset and evaluated the results.
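
The dataset size follows from pairing every API with every schema. The sketch below only reproduces that arithmetic: the `api_*` and `schema_*` names are placeholders rather than the real API references or schemas, and the step where GPT-4 writes a query for each pair is left out.

```python
from itertools import product

NUM_APIS = 46     # GraphQL Search APIs, per the text above
NUM_SCHEMAS = 50  # synthetic schemas, per the text above

# Placeholder identifiers standing in for the real API references and schemas.
api_refs = [f"api_{i}" for i in range(NUM_APIS)]
schemas = [f"schema_{j}" for j in range(NUM_SCHEMAS)]

# One generation task per (API, schema) pair.
tasks = [{"api": api, "schema": schema} for api, schema in product(api_refs, schemas)]

print(len(tasks))  # 2300
```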

Like Verba, Weaviate Gorilla is a retrieval augmented generation (RAG) system. Given a natural language command as input, Weaviate Gorilla retrieves the custom database schema and the appropriate API reference, then uses them to generate the query. There are many exciting opportunities for the Weaviate Python Gorilla and the Weaviate Integration Gorilla!
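
As a rough illustration of that flow, the sketch below picks an API reference for a command and assembles a prompt from the command, the schema, and the reference. Everything in it is an assumption made for the example: the two short reference descriptions, the word-overlap matching in `pick_api_reference`, the `Article` schema, and the prompt wording. It does not show how Weaviate Gorilla itself performs retrieval or prompting.

```python
import json
import re

# Hypothetical one-line summaries of two Weaviate search operators.
API_REFS = {
    "bm25": "keyword search ranked by BM25",
    "nearText": "semantic search by text meaning",
}

# Hypothetical schema for a single class.
SCHEMA = {
    "class": "Article",
    "properties": [{"name": "title", "dataType": ["text"]}],
}

def tokens(text):
    """Lowercased word set used for naive matching."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def pick_api_reference(command, api_refs):
    """Retrieval step: choose the reference sharing the most words with the command."""
    cmd = tokens(command)
    return max(api_refs, key=lambda name: len(cmd & tokens(api_refs[name])))

def build_prompt(command, schema, api_name, api_refs):
    """Combine command, schema, and API reference into one model prompt."""
    return (
        f"Schema:\n{json.dumps(schema, indent=2)}\n\n"
        f"API reference ({api_name}): {api_refs[api_name]}\n\n"
        f"Command: {command}\n"
        "Write a GraphQL query for this command."
    )

command = "Run a keyword search for articles about llamas"
chosen = pick_api_reference(command, API_REFS)
prompt = build_prompt(command, SCHEMA, chosen, API_REFS)
print(prompt)  # this prompt would then be passed to the fine-tuned model
```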

See these resources by Connor Shorten:

Thank you for reading

Do you have any questions about Weaviate, vector databases, documentation, or other topics? Feel free to explore the Weaviate Forum, where you can engage in community conversations.

Bye for now,

Erika